Google Introduces Opal: A Vibe-Coding Tool for Building Web Apps.

- Google’s Opal lets users create and share mini web apps using only text prompts, backed by a visual workflow editor and optional manual tweaks.

- The platform targets non-technical users and positions Google in the expanding "vibe-coding" space alongside startups and design platforms.

I'm a full-time Software Developer with over 4 years of experience working at one of the world’s largest MNCs. Alongside my professional role, I run three blogs: AlgoLesson.com, where I simplify coding and data structures; WorkWithG.com, which focuses on Google tools, tutorials, and tech news; and DifferenceIz.com, where I explain the differences between various concepts in a clear and relatable way. I'm passionate about breaking down complex topics and making learning accessible for everyone.


Android Introduces “Expanded Dark Mode” to Force a Dark Theme

Google is testing an accessibility-focused feature in the second Android Canary build that forces a dark theme on apps without a native one. Dubbed Expanded Dark Mode, it sits alongside the traditional “Standard” dark theme and should make dark styling more consistent system-wide, though not without caveats.

What’s new in Expanded Dark Mode?

Standard Dark Mode: Applies a dark theme only to Android system UI and apps that support it natively.

Expanded Dark Mode: Extends dark styling to apps that lack built-in dark themes. It works more intelligently than the previous “override force‑dark” option, avoiding blanket color inversion in favor of a more refined approach.
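To make the contrast with blanket color inversion concrete, here is a minimal Python sketch. It is not Android's implementation, which the source does not describe; the function names and the lightness-flip approach are assumptions chosen only to illustrate why a plain inversion distorts colors while a more selective conversion need not.

```python
import colorsys

def invert_rgb(rgb):
    """Blanket inversion: flip every channel, which also shifts the hue."""
    r, g, b = rgb
    return (255 - r, 255 - g, 255 - b)

def darken_preserving_hue(rgb):
    """Hypothetical refined approach: flip only lightness in HLS space,
    leaving hue and saturation untouched."""
    r, g, b = (c / 255 for c in rgb)
    h, l, s = colorsys.rgb_to_hls(r, g, b)
    r2, g2, b2 = colorsys.hls_to_rgb(h, 1.0 - l, s)
    return tuple(round(c * 255) for c in (r2, g2, b2))

white_background = (255, 255, 255)
navy_text = (0, 0, 128)

# Both approaches turn a white background black.
print(invert_rgb(white_background), darken_preserving_hue(white_background))

# Blanket inversion turns navy text yellowish; the lightness flip keeps it blue.
print(invert_rgb(navy_text), darken_preserving_hue(navy_text))
```

In this sketch both functions agree on neutral colors, and they differ only on colored elements, which is where a blanket inversion tends to look wrong.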

Because this feature is experimental and only available in Canary builds, users may encounter visual glitches in some apps, such as inconsistent colors or layout issues. Google openly cautions users that not all apps will “play nice,” and in such cases recommends switching back to Standard mode.

The rollout timeline for Beta or Stable channels is not confirmed, though speculation places it in Android 16 QPR2 (expected December 2025).

How to Enable Expanded Dark Mode (In Android Canary builds)

If you’re using an Android device enrolled in the Canary channel, here’s how to turn it on:

Step 1. Open Settings.

Step 2. Navigate to Display & touch → Dark theme.

Step 3. You’ll now see two modes:

- Standard

- Expanded

Image credit: Android Authority

Step 4. Select Expanded to enforce dark styling across more apps—even ones without native support.

Step 5. If you notice any display or layout glitches in specific apps, toggle back to Standard mode.

This feature replaces the older hidden “make more apps dark” or “override force‑dark” settings found in Developer Options, offering a cleaner, user-facing placement in the display settings.

How Will This Update Be Useful?

Users who read or browse their phone in low-light environments—such as at night—will find a more consistent, eye-friendly experience even with apps that haven’t been optimized for dark mode.

While Developer Options offered “override force-dark,” Expanded Dark Mode appears to use more intelligent logic to convert UI elements without distorting images or causing widespread visual artifacts.

This feature is part of an unstable release, so you should expect bugs. Android lets you revert to Standard mode if that improves app stability or appearance.

When it arrives in Beta or Stable under Android 16 QPR2 or later, it could become a key feature for dark‑mode enthusiasts.


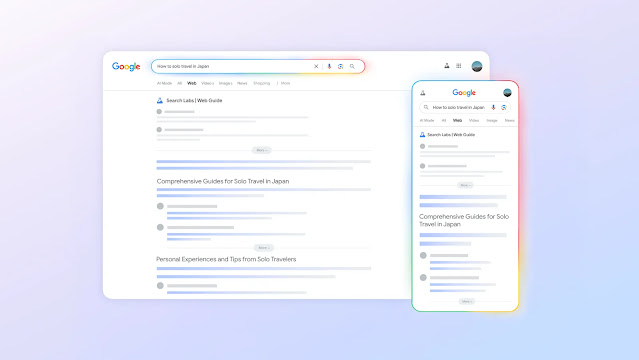

Google Launches AI-Powered Web Guide to Organize Search Results.

- Web Guide uses Gemini AI to organize search results into useful subtopics and related questions within the Web tab.

- The experiment combines AI summaries with traditional links for faster and more intuitive browsing.

What Is Web Guide and How Does It Work?

Why Web Guide Matters.

How To Access the Web Guide?


Google Acknowledges Assistant Glitches on Home and Nest Devices, Teases Major Gemini-Powered Upgrades.

- Google admits reliability problems with Home and Nest Assistant and apologizes for user frustrations.

- The company plans significant Gemini-based upgrades this fall to improve performance and user experience.

Google has admitted that its Assistant for Home and Nest devices has been struggling with reliability issues and has promised significant improvements later this year. The announcement was made by Anish Kattukaran, the Chief Product Officer for Google Home and Nest, in a candid post on X (formerly Twitter) addressing growing user dissatisfaction (e.g. commands not executing or smart devices not responding).

In the post, Kattukaran expressed regret over the current user experience and reassured users that Google has been working on long-term fixes. He also hinted at “major improvements” coming in fall 2025, likely in sync with the wider rollout of Gemini-powered enhancements already previewed in other areas of Google’s smart-home system.

Users Report Multiple Failures with Assistant on Home and Nest Devices.

Smart-home users have experienced frustrating behavior such as voice commands being misunderstood, routines failing to execute, and devices not responding at all. These issues seem more severe than in previous years, which has led to increased public criticism. In response, Kattukaran stated, "We hear you loud and clear and are committed to getting this right," and emphasized that Google is dedicated to creating a reliable and capable assistant experience.

He acknowledged that the current state does not meet user expectations and offered a sincere apology for the inconvenience. The company is working on structural improvements designed to stabilize performance and restore trust before rolling out more advanced features.

What to Expect from Upcoming Gemini Integration.

Google has already introduced limited Gemini-powered upgrades across its product ecosystem. These include smarter search capabilities and more natural language home automations. The promise of major improvements this fall suggests that Gemini will play a central part in improving Assistant reliability, responsiveness, and overall smart-home control.

Kattukaran’s message indicates that this update will go beyond surface tweaks to address deeper architectural issues. It could cover better camera integrations, improved routines, and more robust voice control across all Home and Nest devices. Google plans to reveal details in the coming months, possibly timed with its Pixel 10 launch event.

Why This Matters.

A trustworthy voice assistant is now expected to integrate seamlessly with everyday smart-home devices. When lights refuse to turn on or routines break, it disrupts the convenience and confidence users have come to expect. Google’s open acknowledgement of these issues demonstrates accountability. More importantly, the company’s Gemini-driven focus shows it recognizes that better AI is the next step toward restoring reliability across its ecosystem.


Google Photos Rolls Out AI Tools to Animate Images and Add Artistic Effects.

- Google Photos now lets users turn still images into short animated videos using AI-powered motion effects.

- The new Remix feature transforms photos into artistic styles like anime, sketch, and 3D, offering more creative freedom.

Google Photos is taking another step forward in creative photo editing by launching two innovative features: photo-to-video conversion and Remix. These tools are powered by Google's Veo 2 generative AI model and are being rolled out gradually for users in the United States on both Android and iOS devices. With this update, Google aims to give users more ways to creatively reimagine their memories using intuitive and powerful technology.

Bring Photos to Life with the Photo-to-Video Tool.

The new photo-to-video feature allows users to turn still images into short, animated video clips. You can choose between two effects, called “Subtle movements” and “I’m feeling lucky.” These effects gently animate parts of the photo, such as moving water, shifting clouds, or fluttering leaves. The final video clip lasts about six seconds, and the rendering may take up to one minute.

Users are given several variations to preview, so they can choose the one that suits their vision best. This feature is completely free and does not require access to Gemini or any paid plan.

Transform Images with the Artistic Remix Feature.

In addition to video animations, Google Photos is launching the Remix tool, which lets users apply artistic filters to their photos. These include styles like anime, sketch, comic, 3D animation, and more. The Remix feature is designed to be fun, expressive, and highly customizable. It will begin rolling out to users in the United States over the next few weeks, and it is intended to be simple enough for anyone to use, regardless of experience with photo editing.

To make these new tools easier to access, Google Photos will soon introduce a new Create tab. This tab will be located in the bottom navigation bar of the app and will organize creative tools such as photo-to-video, Remix, collages, and highlight reels in one convenient place. The Create tab is expected to be available starting in August.

Google Watermark on AI-Generated Content.

Google has stated that all content generated through these AI features will include a SynthID digital watermark. This watermark is invisible to the eye but helps verify that the media was created using AI. In addition to this, video clips created through the photo-to-video tool will display a visible watermark in one corner of the screen. Google is encouraging users to rate AI-generated content with a thumbs-up or thumbs-down to provide feedback and help improve the tools over time.

The photo-to-video animation feature became available to U.S. users on July 23, 2025. The Remix feature will become available in the coming weeks. The new Create tab is scheduled to roll out sometime in August. These features will be added automatically, but they may appear at different times for different users depending on regional availability and server updates.


Google Launches Gemini Drops Feed to Centralize AI Tips and Updates.

- Google has launched Gemini Drops, a dedicated feed for AI feature updates, tips, and community content.

- The new hub aims to improve user engagement by centralizing learning resources and real-time Gemini news.

Google has introduced Gemini Drops, a new centralized feed designed to keep users updated on the latest Gemini AI features, tips, community highlights, and more. This innovative addition aims to consolidate AI news and learning within a single, accessible space and represents a meaningful push toward making advanced AI tools more discoverable and engaging for users.

A Centralized AI Updates Hub.

Previously, updates about Gemini’s evolving features were scattered across blogs, release notes, and social media. Gemini Drops changes that by offering users a dedicated feed within the Gemini app or Google’s AI Studio environment. Here, you’ll find everything from major feature rollouts to helpful guides, all curated by Google to keep you informed and empowered.

Features & Community Spotlights.

Gemini Drops doesn’t stop at announcements; it’s a living educational hub. The feed includes:

- How-to guides for new tools like code integrations and real-time photo/video interactions.

- Community spotlights showcasing creative use cases or tutorials from fellow AI enthusiasts.

- Quick tips that help users leverage Gemini’s lesser-known abilities more effectively.

Gemini Drops: Why This Matters.


Perplexity CEO Dares Google to Choose Between Ads and AI Innovation

Key Takeaways:

- Perplexity CEO Aravind Srinivas urges Google to choose between protecting ad revenue or embracing AI-driven browsing innovation.

- As Perplexity’s Comet browser pushes AI-first features, a new browser war looms, challenging Google’s traditional business model.

In a candid Reddit AMA, Perplexity AI CEO Aravind Srinivas criticized Google's reluctance to fully embrace AI agents in web browsing. He believes Google faces a critical choice: either commit to supporting autonomous AI features, accepting short-term losses from reduced ad clicks in order to stay competitive, or keep protecting its ad-driven model.

Srinivas argues that Google’s deeply entrenched advertising structure and bureaucratic layers are impeding innovation, especially as Comet, a new browser from Perplexity, pushes AI agents that summarize content, automate workflows, and offer improved privacy. He described Google as a “giant bureaucratic organisation” constrained by its need to protect ad revenue.

Comet, currently in beta, integrates AI tools directly within a Chromium-based browser, allowing real-time browsing, summarization, and task automation via its “sidecar” assistant. Srinivas expects large tech firms to imitate Comet’s features, and he cautioned that Google in particular must choose between innovation and preservation of its existing monetization model.

Industry experts are watching closely as a new "AI browser war" unfolds. While Google may eventually bring similar ideas to its own products, for example through Project Mariner, Srinivas remains confident that Perplexity's nimble approach and user-first subscription model give it a competitive edge.


OpenAI Expands Infrastructure with Google Cloud to Power ChatGPT.

- OpenAI has partnered with Google Cloud to boost computing power for ChatGPT amid rising infrastructure demands.

- The move marks a shift to a multi-cloud strategy, reducing dependence on Microsoft Azure and enhancing global scalability.

OpenAI has entered into a major cloud partnership with Google Cloud to meet the rising computational demands of its AI models, including ChatGPT. This move, finalized in May 2025, reflects OpenAI’s ongoing strategy to diversify its cloud infrastructure and avoid overreliance on a single provider.

Historically, OpenAI has leaned heavily on Microsoft Azure, thanks to Microsoft’s multi-billion-dollar investment and deep integration with OpenAI’s services. However, with the explosive growth of generative AI and increasing demands for high-performance GPUs, OpenAI has been aggressively expanding its cloud partnerships. The addition of Google Cloud now places the company in a “multi-cloud” model, also involving Oracle and CoreWeave, which recently secured a $12 billion agreement with OpenAI.

By tapping into Google’s global data center network—spanning the U.S., Europe, and Asia—OpenAI gains greater flexibility to manage the heavy compute workloads needed for training and running its large language models. Google, for its part, strengthens its cloud business by onboarding one of the world’s leading AI developers as a client, which not only enhances its credibility but also diversifies its cloud clientele beyond traditional enterprise workloads.

This deal marks a significant step in the ongoing arms race among tech giants to dominate cloud-based AI infrastructure. OpenAI’s multi-cloud strategy ensures resilience, scalability, and availability for its services across different regions and use cases. It also allows the company to better respond to surges in demand for ChatGPT and its API-based offerings, which serve millions of users and enterprise clients daily.

The partnership underscores a broader shift in the tech industry, where high-performance computing for AI is becoming a core battleground. For OpenAI, spreading its workload across multiple providers could mitigate risks, lower costs, and boost its capacity to innovate and iterate at speed.


Google’s AI Can Now Make Phone Calls on Your Behalf

- Google's Gemini AI can now call local businesses for users directly through Search to gather information or book services.

- The feature uses Duplex technology and is available in the U.S., with opt-out options for businesses and premium access for AI Pro users.

Google has taken a major step forward in AI-powered assistance by rolling out a new feature in the U.S. that allows its Gemini AI to make phone calls to local businesses directly through Google Search. This tool, first tested earlier this year, lets users request information like pricing, hours of operation, and service availability without ever picking up the phone.

When someone searches for services such as pet grooming, auto repair, or dry cleaning, they may now see an option labeled “Ask for Me.” If selected, Gemini will use Google’s Duplex voice technology to place a call to the business. The AI introduces itself as calling on the user’s behalf, asks relevant questions, and then returns the response to the user via text or email.

This move transforms the search experience into a more active and intelligent assistant. Users can now delegate simple but time-consuming tasks like making inquiries or scheduling appointments. It’s part of Google’s broader strategy to make AI more agent-like, capable of taking real-world actions on behalf of users.

Image credit: Google

Businesses that don’t want to participate in this feature can opt out using their Google Business Profile settings. For users, the functionality is available across the U.S., but those subscribed to Google’s AI Pro and AI Ultra plans benefit from more usage credits and access to advanced Gemini models like Gemini 2.5 Pro. These premium tiers also include features like Deep Search, which can generate in-depth research reports on complex topics using AI reasoning.

As AI integration deepens in everyday apps, this feature showcases a new phase of interaction, where digital tools not only inform but also act on our behalf. Google’s move reflects the future of AI as not just a search engine assistant, but a personal concierge for real-world tasks.


Latest Google News, Updates, and Features. Everything You Need to Know About Google
