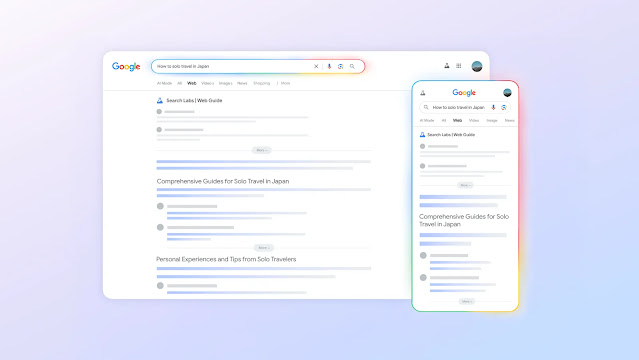

Change Default Search Engine on Desktop.

Step 1: Open Google Chrome Settings.

Step 2: Go to the Search Engine Section.

Step 3: Manage or Manually Add a Search Engine.
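When you add a search engine manually, Chrome asks for a URL that contains `%s` where the search terms should go. The sketch below only illustrates how such a template expands into a full search URL. The helper name `build_search_url` is hypothetical, the DuckDuckGo URL is just an example template, and the `+` encoding of spaces is an assumption for illustration rather than a description of Chrome's internal behavior.

```python
from urllib.parse import quote_plus


def build_search_url(template: str, query: str) -> str:
    """Fill the %s placeholder of a search-engine URL template with an encoded query.

    Hypothetical helper for illustration; it is not part of Chrome.
    """
    if "%s" not in template:
        raise ValueError("template must contain a %s placeholder")
    # quote_plus encodes spaces as '+' and escapes reserved characters.
    return template.replace("%s", quote_plus(query))


# Example template of the kind you would paste into the URL field.
template = "https://duckduckgo.com/?q=%s"
print(build_search_url(template, "chrome default search engine"))
# prints https://duckduckgo.com/?q=chrome+default+search+engine
```

A template without `%s` is rejected here because there would be nowhere to put the query.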

Change Default Search Engine on Mobile.

You can easily change the default search engine within the Google Chrome app on both Android and iOS devices. This setting controls the engine used when you type a query into the address bar (omnibox).

Follow these steps to change the default search engine on mobile:

Step 1: Open Chrome Settings.

Step 2: Navigate to the Search Engine Setting.

Step 3: Select a New Default Engine.

The change will be applied immediately. All future searches performed by typing into the Chrome address bar will use the newly selected search engine.

By following these steps, you can set Chrome's default search engine to match your privacy preferences, preferred search result format, or regional defaults. Review the setting from time to time to confirm that Chrome is still using the engine you chose.


Latest Google News, Updates, and Features. Everything You Need to Know About Google
