- Android’s QR code scanner now features bottom-anchored controls for easier one-handed use, along with a polished launch animation.

- The redesign simplifies post-scan actions with “Copy text” and “Share” options integrated directly into the interface.

Google has quietly rolled out a refined user interface for Android’s built-in QR code scanner through a recent Play Services update. The refreshed design moves controls within thumb reach and adds streamlined animations, making scanning smoother and more intuitive on modern smartphones.

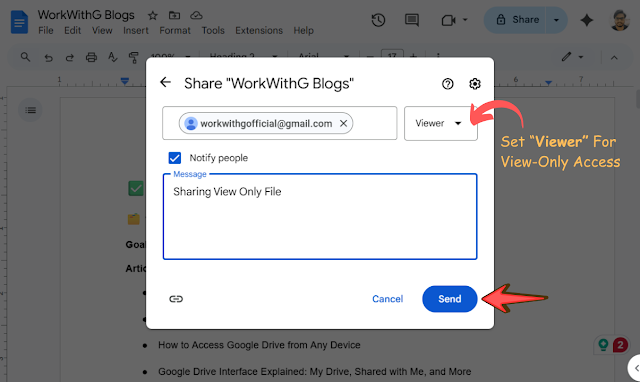

When users open the QR scanner from the Quick Settings tile or an on-device shortcut, they now see a brief launch animation featuring a rounded-square viewfinder. Key buttons, such as the flashlight toggle, feedback, and “Scan from photo,” are consolidated into a single pill-shaped control near the bottom of the screen.

This contrasts sharply with the previous design, which placed the controls at the top of the screen, where they were often hard to reach with one hand.

Once a QR code is detected, the scanner overlays the decoded content in a subtle scalloped circle centered on the viewfinder. The bottom panel now offers not only an “Open” option but also convenient “Copy text” and “Share” actions, eliminating the need to navigate away from the scanning screen.

This refresh improves usability in real-world scenarios, where people often scan QR codes one-handed while multitasking. By moving interaction points lower on the screen, the interface reduces thumb strain and makes the controls easier to reach.

The new layout also adds functionality by offering quick actions immediately after a scan. Whether opening the link, copying the content, or sharing the result, users can act faster without leaving the scanner.

Although Google originally previewed this redesign in its May 2025 release notes for Play Services version 25.19, the visual overhaul is only now becoming widely available as part of the v25.26.35 rollout. Since the update is delivered via Google Play Services, users may need to restart their device or wait a few hours for it to appear even if they are on the latest build.

Latest Google News, Updates, and Features. Everything You Need to Know About Google